We’re excited to announce the release of Griptape Framework 1.8! This release adds support for one of the AI ecosystem’s fastest-growing standards, the Model Context Protocol (MCP), which is now available as a tool type within Griptape Framework. Developers can now integrate a wide range of specialized tools and services directly into their Griptape agents, which simplifies complex AI workflows and speeds up development by removing the need for custom integrations and "glue code."

Model Context Protocol (MCP) Servers as Tools

The centerpiece of Griptape 1.8 is full support for Model Context Protocol (MCP) as Tools. With MCP, Griptape agents can interact with any MCP-compliant service in a standardized way. This standardization means:

- Automatic Data Handling: Data (like documents, search results, or structured facts) can be passed between the agent and MCP tools without explicit serialization or deserialization code from the developer.

- Standardized Interfaces: Each MCP server exposes its capabilities through a well-defined protocol, so the Griptape agent inherently understands how to communicate with it, regardless of the underlying language or implementation of the MCP server.

- Reduced Integration Effort: Developers can simply declare an MCP tool within their Griptape agent, providing the connection details (e.g., URL for an SSE connection, or command for a Stdio connection). The Griptape Framework handles the low-level communication.

Note: Using MCP tools in Griptape 1.8 requires Python 3.10 or higher, because MCP depends on features introduced in that Python version. Please ensure your environment meets this prerequisite before using the new functionality.
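A script that relies on MCP tools can fail fast on an older interpreter instead of erroring later at import time. The following is a minimal sketch using only the standard library; the function name `supports_mcp_tools` is made up for this post and is not part of Griptape.

```python
import sys

# Minimum interpreter version stated in the note above.
MIN_PYTHON = (3, 10)


def supports_mcp_tools(version_info=sys.version_info) -> bool:
    """Return True if the given interpreter version is 3.10 or newer."""
    return tuple(version_info[:2]) >= MIN_PYTHON


if __name__ == "__main__":
    if not supports_mcp_tools():
        raise SystemExit("MCP tools in Griptape 1.8 need Python 3.10 or higher")
```

Placing a guard like this at the top of an entry-point script gives a readable message rather than a stack trace.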

Experimenting with MCP and Griptape Framework

To show MCP Servers as Tools in action, I created a Griptape agent equipped with tools backed by several different open-source MCP servers. With these MCP servers, the agent can access PDF files stored on the local filesystem, convert those PDFs into a format suitable for use by LLMs, identify facts in those PDFs, and load those facts into a graph-based memory store. Agents can then use this memory to answer questions about the content of the PDFs without passing the large PDFs to a model as context. For testing, I used two large PDFs containing publicly available judgements related to a complex legal case.

The MCP servers that I used for this application were:

- Filesystem MCP Server: this allows agents to read PDF files from the local filesystem. As you will see in the code sample below, I configured it to access only a single directory on my local machine. I copied the two PDFs that I wanted to use for testing into this directory.

- pymupdf4llm: an MCP Server for pymupdf4llm, a tool for exporting PDFs to markdown, making them suitable for use by LLMs.

- Graphiti MCP Server: an experimental Model Context Protocol (MCP) server implementation for Graphiti. Graphiti is a framework for building and querying knowledge graphs, specifically tailored for AI agents operating in dynamic environments. This MCP server uses a Neo4j database, and full setup instructions are available in the Graphiti GitHub repository. Using this MCP server requires some rules that you pass to your agent. The repo provides a set of rules for this MCP tool that focuses on coding use cases with Cursor. I used Cursor to modify these rules for general-purpose use by agents that store and retrieve facts.

I created a Griptape Agent with these MCP servers as tools using the code below:

```python
from griptape.tools.mcp.tool import MCPTool
from griptape.tools.mcp.sessions import SSEConnection, StdioConnection
from griptape.structures.agent import Agent
from griptape.rules.rule import Rule
from griptape.drivers.prompt.anthropic import AnthropicPromptDriver
from griptape.utils.chat import Chat

# Graphiti MCP server, reached over the SSE transport
graphiti_memory: SSEConnection = {
    "transport": "sse",
    "url": "http://localhost:8000/sse",
}  # type: ignore

# Filesystem MCP server, limited to a single local directory
filesystem: StdioConnection = {
    "transport": "stdio",
    "command": "npx",
    "args": [
        "-y",
        "@modelcontextprotocol/server-filesystem",
        "/Users/ian/Documents/mcp-server-filesystem",
    ],
}  # type: ignore

# pymupdf4llm MCP server, which converts PDFs to markdown
pdf_to_markdown: StdioConnection = {
    "transport": "stdio",
    "command": "uvx",
    "args": [
        "pymupdf4llm-mcp@latest",
        "stdio",
    ],
}  # type: ignore

agent = Agent(
    prompt_driver=AnthropicPromptDriver(model="claude-sonnet-4-20250514"),
    max_subtasks=100,
    tools=[
        MCPTool(name="GraphitiMemory", connection=graphiti_memory),
        MCPTool(name="Filesystem", connection=filesystem),
        MCPTool(name="PdfToMarkdown", connection=pdf_to_markdown),
    ],
    rules=[
        Rule(
            value="""
            INSERT GRAPHITI MCP USAGE RULES HERE
            """
        )
    ],
)

# Start the agent in interactive chat mode
Chat(agent).start()
```

Using these MCP servers also illustrates the flexibility of the Griptape Framework’s MCP Servers as Tools feature: it works with different transport types and, over the Stdio transport, with MCP servers written in different languages.
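Because the connection details are plain dictionaries, a typo in a key only surfaces once the agent tries to reach the server. The sketch below checks a dictionary up front. It is an illustration, not part of Griptape: the helper name `missing_connection_keys` is hypothetical, and the required keys are simply the ones used in the listing above for the two transports shown in this post.

```python
from typing import Any, Mapping

# Keys each transport needs, taken from the connection dictionaries above.
# Only the two transports used in this post are covered (an assumption of
# this sketch, not a statement about what Griptape supports).
REQUIRED_KEYS = {
    "sse": {"url"},
    "stdio": {"command", "args"},
}


def missing_connection_keys(connection: Mapping[str, Any]) -> set[str]:
    """Return the keys a connection dictionary still needs (empty set if none)."""
    transport = connection.get("transport")
    if transport not in REQUIRED_KEYS:
        raise ValueError(f"transport not covered by this sketch: {transport!r}")
    return REQUIRED_KEYS[transport] - set(connection)
```

Calling `missing_connection_keys(filesystem)` before constructing the agent returns an empty set when the dictionary has every key this sketch expects.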

Running this application starts the agent in interactive chat mode. I started with the following prompt.

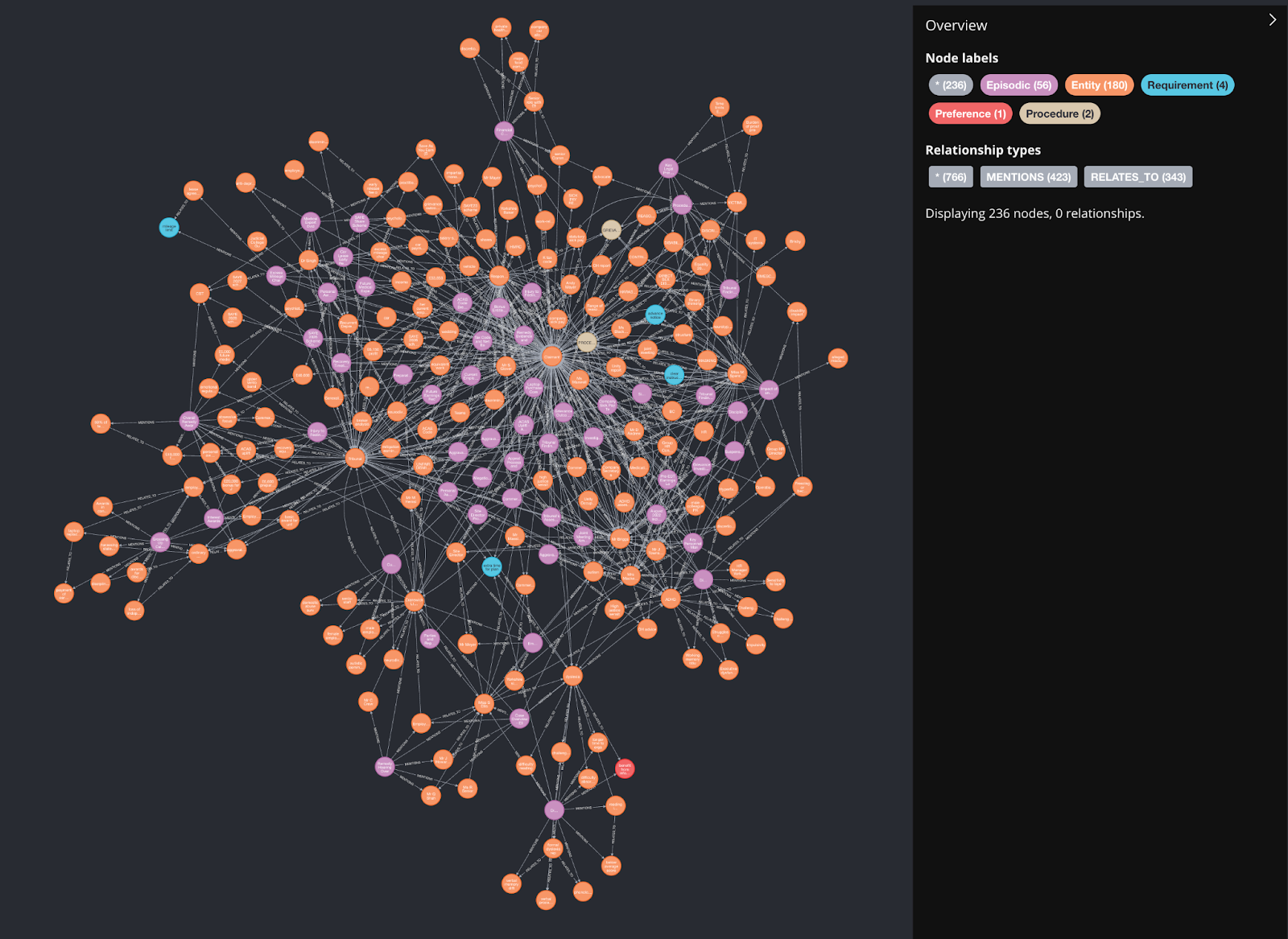

User: read the files judgement.pdf and remedy.pdf and load all the identified facts in those files into graphiti_memory.

The agent uses the filesystem and PDF to markdown MCP Servers to read and convert the PDFs, saving them back to the local filesystem as an intermediate step. The agent then takes a few minutes to process the PDFs, during which I can observe the growth of the graph stored in Neo4j via the Neo4j browser.

Once the agent confirms that it has completed the task, I can check the graph again. This reveals that the Graphiti MCP server has identified a large number of facts, along with the relationships between them, and loaded them into graph memory.

I can then ask a complex question or series of questions about the material in the PDFs. I tried the following prompt:

User: what was the ruling on the preparation time order, what factors were considered material in this ruling, and what value was the preparation time award?

The agent refers to its memory via the MCP Server, and after a few seconds returns the following response. I have removed a few identifying details as this is for illustrative purposes only.

Assistant: Based on the information gathered, I can now provide a concise answer to your questions about the preparation time order ruling.

## Ruling on the Preparation Time Order

The Tribunal awarded a preparation time order of X,XXX, calculated as ZZZ hours at a rate of YY per hour.

## Material Factors Considered in the Ruling

The Tribunal considered the following material factors in making this ruling:

1. The Respondent's unreasonable conduct during proceedings, including:

- Misleading response about witness statement withdrawal

- Unfair attempts to persuade the unrepresented Claimant to drop significant claim elements

- Misrepresenting the Claimant's interactions about the list of issues

- Unreasonable approach to hearing file preparation

- Making a second strike-out application on the final day of the liability hearing

2. The impact on the Claimant:

- The unreasonable conduct caused additional work and stress for the Claimant

3. Proportionality:

- The Tribunal found ZZZ hours (equivalent to BB working days) to be proportionate to the unreasonable conduct

As you can see from this example, using MCP Servers as Tools with Griptape Framework allows you to quickly create powerful LLM-powered agents that can tap into a wide range of capabilities such as third-party knowledge storage and retrieval tools, aggregator APIs, domain-specific calculators, and more, all via MCP. The Griptape Framework now acts as a first-class orchestrator in this emerging, standards-driven ecosystem.

Stay tuned for updates to Griptape Nodes now that this powerful new ecosystem is unlocked.

Looking Back: A Brief Recap of Griptape 1.7

While we’re excited to bring MCP Tools to Griptape Framework in the 1.8 release, it’s only fitting that we briefly recap the main changes we introduced in 1.7, especially as we didn’t cover them in-depth at the time.

Most of the improvements in 1.7 were focused on laying foundational support for upcoming Griptape Nodes features, as well as adding new integrations and enhancements for our partner, EDB. Some of the highlights of the 1.7 release were:

- EDB PG.AI Integration: Added a new EDB PG.AI Knowledge Base Vector Store Driver, providing support for EDB and making it easier to integrate EDB’s database capabilities into your Griptape Framework-based applications.

- Support for NVIDIA NIM: Added drivers for NVIDIA NIM embedding and reranking models.

- Griptape Nodes Foundational Improvements: Changes to support the development of Griptape Nodes, including support for OpenAI’s gpt-image-1 image generation model, URL and ImageURL artifacts, and partial support for ImageURL artifacts with prompt drivers.

- Updates to default models, bug fixes and stability improvements: As always, we rolled out a series of performance improvements and bug fixes to keep the framework fast and reliable.

While these 1.7 features were largely “under the hood,” they were important steps toward our vision of creating a robust, modular, and enterprise-ready platform for building, deploying, and managing sophisticated generative AI applications.

Get Started

Ready to try the new MCP tools? Update your existing Griptape Framework installation with uv, pip or your favorite package manager, using Python 3.10 or later, and check out the latest documentation for quick start examples.
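After upgrading, you can confirm from Python that the environment has picked up a release with MCP tools. This is a minimal sketch using the standard library’s `importlib.metadata`; the helper names `parse_release` and `has_mcp_release` are made up for illustration, and the check assumes the package is installed under the distribution name `griptape`.

```python
import re
from importlib.metadata import PackageNotFoundError, version


def parse_release(raw: str) -> tuple[int, int]:
    """Extract (major, minor) from a version string such as '1.8.0'."""
    match = re.match(r"(\d+)\.(\d+)", raw)
    if match is None:
        raise ValueError(f"unrecognized version string: {raw!r}")
    return int(match.group(1)), int(match.group(2))


def has_mcp_release(package: str = "griptape") -> bool:
    """Return True if the installed package is version 1.8 or newer."""
    try:
        return parse_release(version(package)) >= (1, 8)
    except PackageNotFoundError:
        # The package is not installed in this environment.
        return False
```

Comparing `(major, minor)` tuples rather than raw strings avoids the pitfall where `"1.10"` sorts before `"1.8"` alphabetically.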

We love to hear what you’re building with Griptape, so please share your projects and feedback in the Griptape Discord!

Final Notes

Griptape Framework 1.8 keeps you at the cutting edge of LLM application development. With MCP support, your agents can connect more easily to the broader tool ecosystem and respond flexibly to the evolving demands of generative AI workflows.

Stay tuned for deeper dives into MCP use cases and additional enhancements in future releases!

Happy coding from the Griptape team!