The creative landscape is evolving at breakneck speed, and generative AI is at the heart of this transformation. Whether you’re a digital artist, game designer, advertising creative, or VFX specialist, the tools you use can make or break your next big idea. That’s why Griptape Nodes continues to push boundaries.

Today, we’re pleased to announce improved support for Google’s most advanced AI models for video, image, and now, audio generation.

Since our last major update, we’ve listened to the creative community and supercharged the Griptape Nodes: Google AI Library with powerful new features designed to unlock even more possibilities in your workflow. Here’s what’s new, and why you’ll want to dive in today.

Veo Image-to-Video Generator: Animate Your Art in Seconds

Imagine transforming a static image into a dynamic video sequence with just a few clicks. The new Veo Image-to-Video Generator node lets you do exactly that, leveraging Google’s cutting-edge Veo model. Simply feed in your image, whether it’s an original artwork, a concept render, or a storyboard frame, and watch as it comes to life. With base64 encoding support, you can easily integrate images from a variety of sources and fine-tune the animation with optional prompts, negative prompts, and seed controls for reproducibility. It’s never been easier to add motion to your creative vision.
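For readers curious about what base64 image input looks like in practice, here is a minimal Python sketch. It is not the node's actual implementation: the function names and the dictionary keys (`image_base64`, `prompt`, `negative_prompt`, `seed`) are hypothetical and chosen only to mirror the inputs described above.

```python
import base64


def encode_image_to_base64(image_bytes: bytes) -> str:
    """Return the base64 text form of raw image bytes."""
    return base64.b64encode(image_bytes).decode("ascii")


def build_image_to_video_inputs(image_bytes, prompt=None, negative_prompt=None, seed=None):
    """Bundle the image with its optional controls.

    The key names are hypothetical, not the node's real parameter names.
    """
    inputs = {"image_base64": encode_image_to_base64(image_bytes)}
    optional = {"prompt": prompt, "negative_prompt": negative_prompt, "seed": seed}
    # Only include the optional controls that were actually provided.
    inputs.update({key: value for key, value in optional.items() if value is not None})
    return inputs
```

In a real workflow the bytes would come from an image file or an upstream node, and reusing the same seed is what makes a run repeatable.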

In the example below, I'm using the ice cream truck that I generated with Google's Imagen 4 Ultra model in the post where we introduced the Griptape Nodes: Google AI Library. For the image-to-video model, I reuse the same prompt that I used to generate the image.

A rusty blue pickup truck crafted entirely from textured ice cream, with melting drips and creamy swirls forming its body. The truck's "rust" appears as patches of chocolate fudge and caramel streaks. It races energetically along a street in a futuristic city surrounded by neon illuminated skyscrapers kicking up sprinkles and crushed waffle cone debris. Emphasize the contrast between the cold, creamy truck and the dynamic motion, capturing playful whimsy and detailed textures of ice cream blending with mechanical elements.

I set the model to veo-3.0-generate-001, the output resolution to 1080p, and the number of videos to 4. Google's model API is somewhat unusual in that it supports multiple generations, which is useful if you want to generate a few options and then select the best one for your specific use. We display the four generated videos in a new node added in this update; more on that a little later in this post. These videos are generated with sound, so remember to turn up your volume before you hit play on the video below, which shows the results I got from running the workflow with these settings.

This node also works with images from any other source. For this second example, I generated an image using Black Forest Labs' FLUX.1 Kontext [pro] model using the following prompt.

An astronaut in a spacesuit walking slowly across a rocky, lunar-like landscape towards a large rock archway, leaving footprints in the dust of the planet's surface. Beyond the arch, a massive planet with swirling orange and white clouds is visible in the starry sky, slowly rotating to reveal its shifting surface patterns. The camera is behind the astronaut as the astronaut walks steadily towards the arch, their shadow shifting on the ground. The scene captures a sense of awe and exploration in a desolate, otherworldly environment

Using the same prompt, the image, and the same settings as above, I generated the video below.

Lyria Audio Generator: Original Soundtracks, No Copyright Headaches

Audio is the soul of any immersive experience, and now you can generate 30-second instrumental tracks using Google’s Lyria model, directly within Griptape Nodes. The Lyria Audio Generator is designed with creative professionals in mind, offering prompt guidance to help you avoid copyright pitfalls. Describe the mood, instrumentation, or atmosphere you want, and let the AI compose something unique. With high-quality 48kHz WAV output and seed-based reproducibility, you can experiment freely and find the perfect sound for your project, worry-free.
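If you want to confirm the sample rate and length of a generated clip once you have saved it, Python's standard `wave` module is enough. The sketch below assumes the file is PCM-encoded WAV, and it uses a silent clip built in memory as a stand-in for real Lyria output; the helper names are illustrative only.

```python
import io
import wave


def describe_wav(wav_bytes: bytes) -> tuple[int, float]:
    """Return (sample_rate_hz, duration_seconds) for PCM WAV data."""
    with wave.open(io.BytesIO(wav_bytes), "rb") as wav_file:
        sample_rate = wav_file.getframerate()
        duration = wav_file.getnframes() / sample_rate
    return sample_rate, duration


def make_silent_wav(seconds: int, sample_rate: int = 48_000) -> bytes:
    """Build a silent 16-bit mono WAV in memory (a stand-in, not real model output)."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav_file:
        wav_file.setnchannels(1)
        wav_file.setsampwidth(2)  # 2 bytes per sample = 16-bit audio
        wav_file.setframerate(sample_rate)
        wav_file.writeframes(b"\x00\x00" * sample_rate * seconds)
    return buffer.getvalue()
```

Calling `describe_wav` on the bytes of a saved track should report 48000 Hz and roughly 30 seconds if the output matches the description above.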

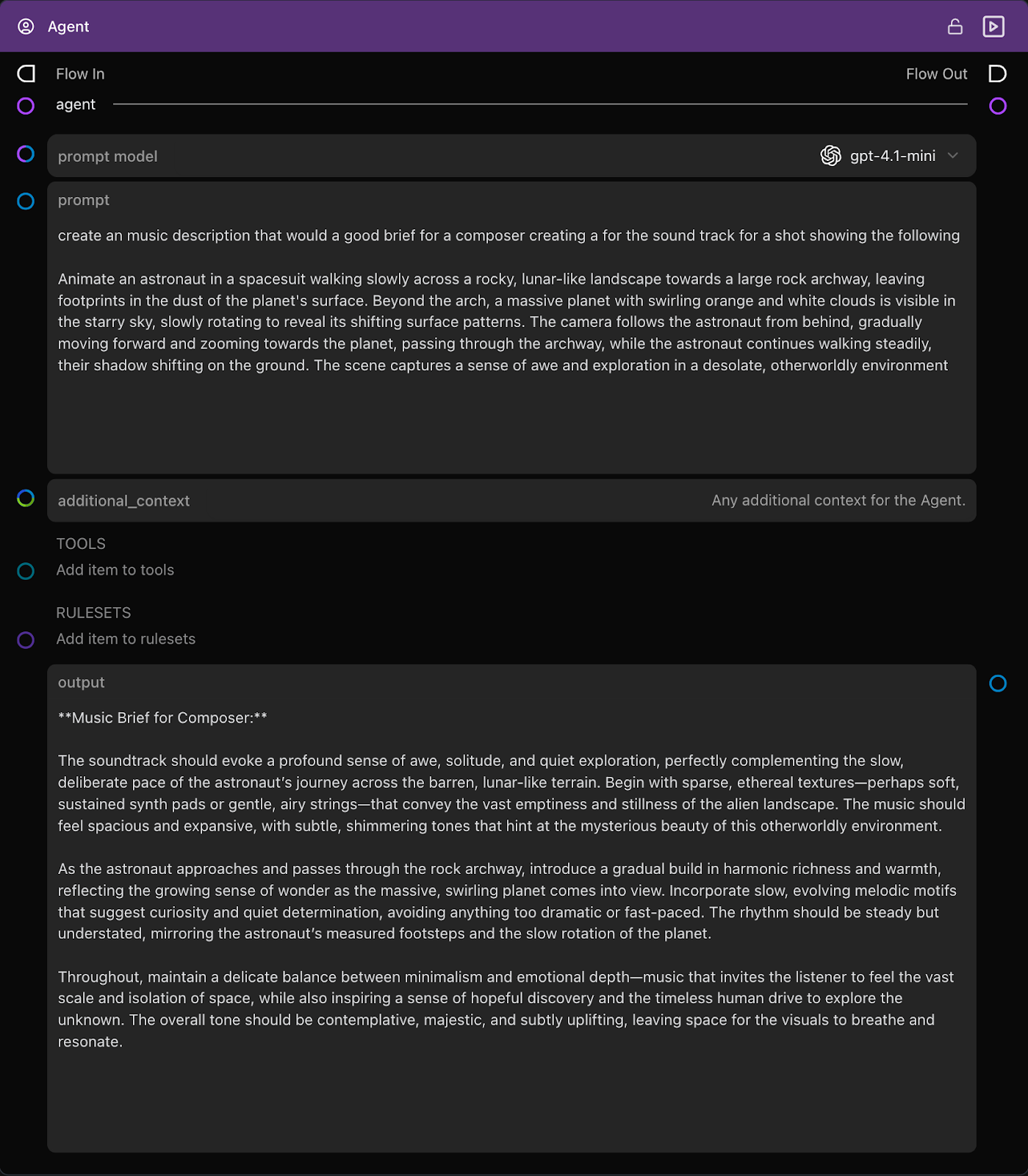

To generate some music for the scene containing our moon-walking astronaut, I decided to use an Agent in Griptape Nodes. You can see the prompt and the output that the agent generated in this image.

I passed the generated output to a Lyria Audio Generator node. You can hear the generated audio below. I am really, really happy with the results I got here. It evokes memories of 2001: A Space Odyssey.

Multi Video Display: Compare, Curate, and Collaborate

Managing multiple video outputs just got a whole lot easier. The new Multi Video Display node arranges your generated videos in a dynamic grid layout, with individual output ports for each position. This means you can instantly compare different versions, curate the best takes, or share specific outputs with collaborators, all from a single, intuitive interface. Real-time UI updates and intelligent grid management keep your workflow smooth and organized, even as your creative ambitions scale.
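To make the idea of a grid with one output per position concrete, here is a small Python sketch that assigns each item a row and column in a near-square grid. This is only one plausible layout rule and an assumption on my part; the node's actual grid logic may differ, and `grid_positions` is a hypothetical helper.

```python
import math


def grid_positions(count: int) -> list[tuple[int, int]]:
    """Return a (row, column) pair for each of `count` items in a near-square grid."""
    if count <= 0:
        return []
    # Use enough columns that the grid is roughly as wide as it is tall.
    columns = math.ceil(math.sqrt(count))
    return [divmod(index, columns) for index in range(count)]
```

With four videos, as in the Veo example earlier in this post, this rule produces a 2 x 2 grid, and each position could map to its own output port.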

Multi Audio Display: Sound Design, Streamlined

Audio experimentation often means juggling multiple tracks and iterations. The Multi Audio Display node brings order to the chaos, displaying your generated audio clips in a grid with individual output ports for each. Whether you’re layering soundscapes, testing variations, or presenting options to a client, this node makes it effortless to manage and audition your audio assets. It’s compatible with standard audio formats and integrates seamlessly with the rest of your Griptape Nodes workflow.

How to Get Started

Griptape Nodes isn’t just about access to powerful AI. It’s about empowering creative professionals to work faster, smarter, and with fewer technical barriers. With streamlined authentication, dynamic display nodes, and support for the latest Google AI models, you can focus on what matters most: bringing your ideas to life.

Sign up for Griptape Nodes today and experience the future of creative AI. Whether you’re animating images, composing original music, or orchestrating complex multimedia projects, these new features are your ticket to a more inspired, efficient, and boundary-pushing workflow.

Visit griptapenodes.com to get started and explore the documentation. Have questions or want to share your latest masterpiece? Join a community of forward-thinking creators on our Discord. We can’t wait to see what you’ll create next!

The Griptape Nodes: Google AI Library is available on GitHub, where the repo includes full installation and setup instructions.